This is the first post in a longer series on service oriented software architecture. The story begins with a retrospective of a large software project I was part of at the beginning of the century. The task was to implement a new merchandise software suite for a large European retailer in line with their “Delta” service architecture.

Delta

The Delta service architecture was based on healthy design principles such as encapsulation, autonomy, independent deployment and contracts. The principles had been applied at the business level and produced fine-grained, independent services with names such as Merchandising Store, Assortment, Promotion, Retail Price and Store Replenishment, each service being responsible for a limited but clearly defined business capability. If I remember correctly, there were more than 20 services to build for the whole suite, including buying and selling, representing a functional decomposition of the business.

Since all services were to be deployed in the same Enterprise Java production environment, the team decided on a product line approach. It established a set of principles supported by common components: the Object Relational Bridge for object persistence, JMS (Java Message Service) for asynchronous contract processing, and domain logic implemented as a rich object model guarded by transactional boundaries. From a technical point of view this approach turned out to work reasonably well. The blueprint is shown in figure 2.

Today some of these choices might look weird, but there was no Cloud, no Spring framework and no Docker when this was made. In addition, the client had already made several architectural decisions, such as the choice of application server, database and message broker. These choices limited our ability to exploit the technology compared with what we could have achieved by starting out with an open source strategy.

One lesson learned here is to avoid, if possible, premature, politically high-profile commercial technology decisions. Such decisions always involve senior management because serious money changes hands, yet they also need to be architecturally sound to stand the test of time.

Core Domain

The development team was new to the retail domain, and therefore we decided to begin with the simplest service, Retail Store. In retrospect this was a bad decision, because it made us begin at the fringe of the domain instead of at the core.

Item is the core of retail. Deciding what items to sell in a particular store is the essence of the retailing business. A can of beer, the six pack and the case of six packs are all items, and so is a bundle of a pizza and a bottle of water. The effect is that tens of thousands of items are in play; some of them are seasonal, others geographical, and others are bundles and promotions such as 3 for 2.

Items can be nested structures organised into categories such as dairy, meat, fish, fruit and beverage. Which items are found in a particular store is determined by store type or format, size, season and geography. A simplified domain model is found in figure 3.
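To make the nesting concrete, here is a minimal Java sketch that treats an item as a composite: either a single unit or a list of (item, quantity) components. It assumes a heavily simplified model; the names `Item`, `Component`, `Category` and `baseUnits` are hypothetical illustrations, not the model in figure 3 or the retailer's actual design, and store format, season and geography are left out. The assumption that a case holds four six packs is also only for illustration.

```java
import java.util.List;

// Hypothetical sketch, not the project's actual domain model.
enum Category { DAIRY, MEAT, FISH, FRUIT, BEVERAGE }

// One line in a composite item: which item, and how many of it.
record Component(Item item, int quantity) {}

// An item is either a single unit (no components) or a composite such as a
// six pack, a case of six packs, or a bundle of different items.
record Item(String name, Category category, List<Component> components) {
    Item {
        components = List.copyOf(components); // immutable defensive copy
    }

    static Item unit(String name, Category category) {
        return new Item(name, category, List.of());
    }

    // Counts the single units contained, walking the nested structure recursively.
    int baseUnits() {
        if (components.isEmpty()) return 1;
        int total = 0;
        for (Component c : components) {
            total += c.quantity() * c.item().baseUnits();
        }
        return total;
    }
}

final class ItemDemo {
    public static void main(String[] args) {
        Item can = Item.unit("Beer can", Category.BEVERAGE);
        Item sixPack = new Item("Six pack", Category.BEVERAGE, List.of(new Component(can, 6)));
        // Assumption for illustration: a case holds four six packs.
        Item caseOfSixPacks = new Item("Case of six packs", Category.BEVERAGE,
                List.of(new Component(sixPack, 4)));
        System.out.println(caseOfSixPacks.baseUnits()); // prints 24
    }
}
```

A bundle such as a pizza and a bottle of water fits the same shape: one item whose components point at two unrelated base items.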

When the work began we had a high level business service architecture, but we had no domain model showing the main business objects and their relationships to guide the work forward. The effect was that the team learnt the domain the hard way as it dug itself from the edges toward the core.

A fun fact is that the team met Eric Evans when he presented his book on Domain-Driven Design in 2004, and discovered that we had faced many of the same challenges as he had. The difference was that he had been able to articulate those challenges and turn them into a book. Had the book been out earlier, we would have been in a better position to ask hard questions about our own approach.

Learnings

I have identified five major learnings from this endeavour that are relevant for those who are considering Microservices as the architectural style for their enterprise business applications. At first glance the idea of small independent services sounds great, but it comes with some caveats and food for thought.

Firstly, a top-down, business capability based service decomposition without a thorough bottom-up analysis of the underpinning domain model is dangerous. In Domain-Driven Design speak this means that identifying bounded contexts requires a top-down, bottom-up and middle-out strategic design exercise, since business capability boundaries and domain model boundaries are seldom the same. Working out those boundaries early is crucial for the system's architectural integrity. It is the key to evolution.

Secondly, begin with the core of the domain and work toward the edges. Retail is about the management of items: how to source them, and how to bring them to the appropriate shelves with the correct price given season, geography and campaigns. Beginning with the Retail Store service because it was simple was fine as a technical spike, but not as a strategy.

Thirdly, fine-grained services lead to quadratic growth in connectivity and a need to copy data between services. The number of possible connections between n nodes in a graph is f(n) = n(n-1)/2. Therefore 5 services have 10 connections, doubling to 10 services gives 45 connections, and doubling again to 20 services gives 190 connections; each doubling roughly quadruples the count. The crux is to understand how many connections must be operational for the system as a whole to work, and to balance this with a healthy highway system that provides the required transport capacity and endpoint management.
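The arithmetic above can be checked with a few lines of Java. This is a minimal sketch assuming the worst case, where every service may need to talk to every other service (a complete graph); the class name `ServiceConnections` is a hypothetical illustration.

```java
// Counts pairwise connections among n services when every service may talk to
// every other one: f(n) = n(n - 1) / 2, the number of edges in a complete graph.
final class ServiceConnections {
    private ServiceConnections() {}

    static long count(int n) {
        if (n < 0) throw new IllegalArgumentException("n must be non-negative");
        // Widen to long before multiplying so large n cannot overflow int.
        return (long) n * (n - 1) / 2;
    }

    public static void main(String[] args) {
        for (int n : new int[] {5, 10, 20, 40}) {
            System.out.printf("%2d services -> %3d connections%n", n, count(n));
        }
    }
}
```

Running `main` prints 10, 45, 190 and 780 connections for 5, 10, 20 and 40 services, which shows the quadratic rather than exponential shape of the growth.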

Fourthly, the development team was happy when the store service worked, but a single working service at the fringe of the domain does not serve the business. The crucial question to ask is: what set of functionality must be present for the system as a whole to be useful? The ugly worst-case answer is all of it, including a cross-cutting user interface that we leave out for now. The lesson is that Microservices might give developers a perception of speed, but for the business, which needs the whole to be operational, the opposite might be the case. Therefore the operational needs should drive the architecture, as functional wholes must be put into production.

Fifthly, the service architecture led to a distributed and fragmented domain model, since the service boundaries were not aligned with the underpinning domain model seen in figure 3. Price, Assortment and Promotion have the same data foundation and share 80% of the logic, which we ended up replicating across those services.

To sum it all up: understand the whole before the parts, and then carefully slice the whole into cohesive modules with well-defined boundaries, remembering that the business makes its money from an operational whole, not from fragmented services that were easy to build.

Microservices

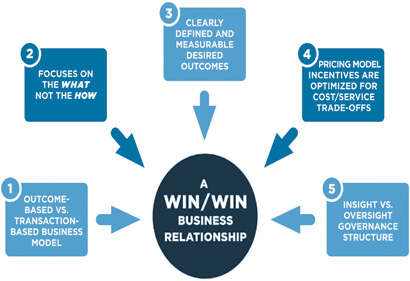

Microservices is an architectural style, introduced around 2013, that promotes small autonomous services that work together and are modelled around a business domain. The style is supported by a set of principles such as a culture of automation, hiding implementation details, decentralising all the things, independent deployment, consumer first and isolating failure. These principles are difficult to argue against, but a literal interpretation might cause more harm than necessary.

Philosophically, the Microservice style follows the principles of Cartesian reductionism, as did Lord Nelson in his divide-and-conquer strategy. The big difference is that Lord Nelson’s task was to dismantle the French fleet, not to build a new fleet from the rubble, and this difference captures, IMHO, the major challenge with the Microservice style. It is aimed at independent development and deployment of the parts, pushing the assembly of the whole onto operations. Some might argue that this is then fixed by the DevOps model, but if there are 20 services supported by 20 teams, the coordination problem is inevitable.

Conclusion

Service oriented architectures and the Microservice architectural style offer opportunities for those who need independent deployment. For applications that do not need independent deployment, a more cohesive or monolithic deployment approach might be better. Independent of style, the crux is to get the partitioning of the domain right, both at design time and in operations. The key question to answer is: what must be operational as a functional whole?

This means that the design time boundaries and the operational boundaries are not identical, and for a solution to be successful the operational boundary is the most important. That said, to secure healthy operational boundaries, the internals of the system need to be well designed. Approaching a large, complex domain with transaction scripts will most likely create problems.

In the next post the plan is to address how data management can be moved out of the functional services, enabling data-less services, along the lines that data ages like wine, while software ages like fish …

See you all next time; any comments and questions are more than welcome.